Data and chunk sizes matter when using multiprocessing.Pool.map() in Python

[Note: This is a follow-on post to an earlier post about parallel programming in Python.]

In Python, multiprocessing.Pool.map(f, c, s) is a simple method to realize data parallelism — given a function f, a collection c of data items, and chunk size s, f is applied in parallel to the data items in c in chunks of size s and the results are returned as a collection.
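As a minimal illustration of the call described above, the sketch below applies a function to a small collection with an explicit chunk size. The function `square`, the helper `parallel_squares`, and the pool size of 2 are hypothetical choices made only for this example.

```python
from multiprocessing import Pool


def square(x):
    """Function applied to each data item (defined at module level so it can be pickled)."""
    return x * x


def parallel_squares(items, chunk_size, processes=2):
    """Apply square to items in parallel, handing work to workers in chunks of chunk_size."""
    with Pool(processes=processes) as pool:
        # Equivalent to multiprocessing.Pool.map(f, c, s) with f=square, c=items, s=chunk_size.
        return pool.map(square, items, chunk_size)


if __name__ == "__main__":
    print(parallel_squares(range(10), 3))
```

The results come back as a list in the same order as the input items, regardless of which worker processed which chunk.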

In cases where f depends on auxiliary data, one option is to extend f with an additional parameter for auxiliary data and apply this extended version of f to each data item along with the auxiliary data. Another option is to initialize f with the auxiliary data and apply this initialized version of f to each data item.

The snippet below explores both options. The first option is realized in the without_initializer method (lines 47–48, 58, and 61), while the second option is realized in the with_initializer method (lines 15–24, 34, and 37). In both realizations, no chunk size is passed to map (lines 37 and 61), so the default chunk size is used.

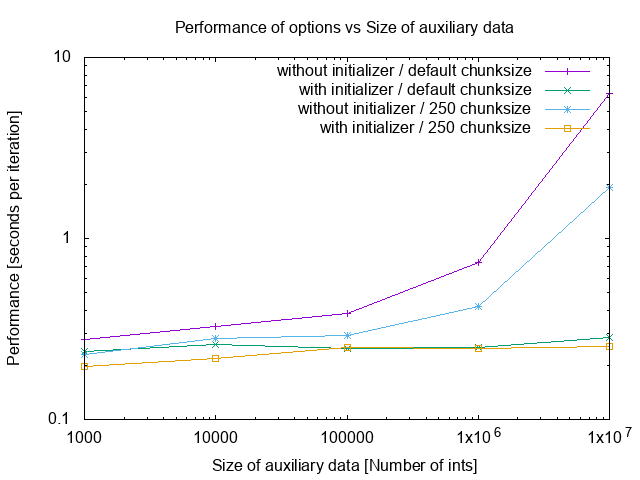
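For readers who want to experiment, the two options can be sketched roughly as follows. This is not the code from the snippet above, and its line numbers do not correspond to the ones cited in the text. The helper names `process_with_aux`, `process_item`, and `init_worker`, the per-item computation, and the pool size of 2 are all assumptions made for illustration.

```python
from itertools import repeat
from multiprocessing import Pool


# Option 1: the auxiliary data is an extra argument, so it is pickled
# and shipped to the workers together with the data items.
def process_with_aux(item, aux_data):
    # Hypothetical computation, not the one used in the original snippet.
    return item + aux_data[item % len(aux_data)]


def without_initializer(data, aux_data, processes=2):
    with Pool(processes=processes) as pool:
        # Pair every data item with the auxiliary data.
        return pool.starmap(process_with_aux, zip(data, repeat(aux_data)))


# Option 2: each worker process receives the auxiliary data once,
# when the pool starts, and keeps it in a module-level variable.
_aux_data = None


def init_worker(aux_data):
    global _aux_data
    _aux_data = aux_data


def process_item(item):
    # Same hypothetical computation, reading the worker-local copy.
    return item + _aux_data[item % len(_aux_data)]


def with_initializer(data, aux_data, processes=2):
    with Pool(processes=processes, initializer=init_worker,
              initargs=(aux_data,)) as pool:
        return pool.map(process_item, data)


if __name__ == "__main__":
    data = list(range(1_000))
    aux_data = list(range(100_000))
    assert without_initializer(data, aux_data) == with_initializer(data, aux_data)
    print("both options return the same results")
```

In this sketch the only structural difference is where the auxiliary data travels: with the tasks in option 1, and with the pool start-up in option 2.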

Since both options look almost identical in structure, it is reasonable to assume they would have similar performance characteristics. However, executing both options proves this assumption is wrong.

As the size of the auxiliary data grows from 100K ints to 10M ints, the running time of the first option increases sharply (from about 0.38 to 6.29 seconds per iteration), whereas the running time of the second option hardly changes (about 0.25 to 0.28 seconds per iteration). At 10M ints, the second option was ~20 times faster than the first option.

python3.6 test_pool_map.py without_initializer

aux_data 1,000 ints : 0.276938 secs per iteration 9999000

aux_data 10,000 ints : 0.328476 secs per iteration 9999000

aux_data 100,000 ints : 0.382901 secs per iteration 9999000

aux_data 1,000,000 ints : 0.736724 secs per iteration 9999000

aux_data 10,000,000 ints : 6.290008 secs per iteration 9999000

python3.6 test_pool_map.py with_initializer

aux_data 1,000 ints : 0.236063 secs per iteration 9999000

aux_data 10,000 ints : 0.258173 secs per iteration 9999000

aux_data 100,000 ints : 0.247279 secs per iteration 9999000

aux_data 1,000,000 ints : 0.248649 secs per iteration 9999000

aux_data 10,000,000 ints : 0.283174 secs per iteration 9999000

All other things being equal, the poor performance of the first option could be due to

- Passing of auxiliary data as an additional argument along with each data item or

- Default chunk size used for map method.
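To get a rough feel for the first possible cause, one can measure how many bytes the auxiliary data occupies once pickled, since multiprocessing uses pickle to send tasks to worker processes. The helper `pickled_size` below is hypothetical, and the numbers it prints are only an estimate of the actual transfer cost.

```python
import pickle


def pickled_size(obj):
    """Return the number of bytes needed to pickle obj."""
    return len(pickle.dumps(obj, protocol=pickle.HIGHEST_PROTOCOL))


for n in (1_000, 10_000, 100_000, 1_000_000):
    aux_data = list(range(n))
    print(f"aux_data {n:>9,} ints : {pickled_size(aux_data):,} bytes when pickled")
```

The pickled size grows with the number of ints, so any scheme that ships the auxiliary data repeatedly pays that cost repeatedly.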

So, to gauge how much each of these reasons contributes, I changed the chunk size to 250 (on lines 37 and 61) and executed both options again.
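To see what a chunk size means in practice, the sketch below splits a collection into consecutive chunks of a given size and counts the resulting tasks. The helper `chunks` is hypothetical and only mimics the idea of chunking; the collection of 1,000 items is an assumed size, not the size used in the experiment.

```python
from itertools import islice


def chunks(items, size):
    """Yield consecutive tuples of at most size items."""
    it = iter(items)
    while True:
        chunk = tuple(islice(it, size))
        if not chunk:
            return
        yield chunk


data = range(1_000)  # assumed collection size, for illustration only
for size in (1, 250, 1_000):
    print(f"chunk size {size:>5} -> {len(list(chunks(data, size)))} tasks")
```

Smaller chunks mean more tasks and more messages between processes, while larger chunks mean fewer, bigger messages and coarser load balancing.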

As expected, the chunk size did make a difference, as is evident in both the graph (see above) and the output (see below). The performance of the first option improved by a factor of up to 3. Even so, the second option was at times ~7 times faster than the first option.

python3.6 test_pool_map.py without_initializer

aux_data 1,000 ints : 0.228136 secs per iteration 9999000

aux_data 10,000 ints : 0.278656 secs per iteration 9999000

aux_data 100,000 ints : 0.290525 secs per iteration 9999000

aux_data 1,000,000 ints : 0.421532 secs per iteration 9999000

aux_data 10,000,000 ints : 1.912790 secs per iteration 9999000

python3.6 test_pool_map.py with_initializer

aux_data 1,000 ints : 0.196221 secs per iteration 9999000

aux_data 10,000 ints : 0.216774 secs per iteration 9999000

aux_data 100,000 ints : 0.248915 secs per iteration 9999000

aux_data 1,000,000 ints : 0.245638 secs per iteration 9999000

aux_data 10,000,000 ints : 0.251745 secs per iteration 9999000

[The number 9999000 in the last column is the result of the map operation on the last iteration and it can be ignored for this performance evaluation.]

If you want to use the multiprocessing module in Python to write data parallel code, then keep the size of the data items small and use initialization support, if possible. Also, pick a chunk size that is good for your data set; don't rely on the defaults.